Tuesday, November 12, 2024

GAS: Subnet 34 Becomes a Generative Adversarial Engine

The next evolution of decentralized AI Generated Content (AIGC) detection

tl;dr

- Miners can submit detector & generator models. No live endpoints.

- Validators batch-evaluate on a public, auto-evolving adversarial benchmark.

- No registration wars. Full model privacy. Open data.

- Top models deploy in production via BitMind for enterprise use.

1. Why we rebuilt Subnet 34

Subnet 34 began as a distributed inference network for AIGC detection — a “subnet-as-infrastructure” design. It worked, but it carried structural costs that limited scalability and competition dynamics that distracted from what we actually wanted to measure: model performance. Miners kept GPUs online 24/7 to serve API traffic and validator challenges.

This created three competing signals, only one of which mattered:

- Model accuracy: detecting AI vs. real across images and videos (what we actually care about).

- Uptime and throughput: surviving QPS spikes of 1k+ without dropping requests.

- Registration pressure: miners racing to register or re-register to dominate limited slots.

Key drawbacks emerged:

- High mining cost: constant uptime meant constant expense, compounded by periodic registration wars.

- Adversarial behavior: coordinated denial-of-service attacks and slot monopolies distorted fair competition.

- Lack of data privacy: enterprise partners couldn’t send sensitive media to unvetted miner endpoints.

- Unnecessary latency: every request traveled the full client ↔ API ↔ validator ↔ miner loop.

We stripped out everything that didn’t measure model quality and rebuilt SN34 as GAS: a self-improving adversarial engine where intelligence, not infrastructure, wins.

Adding a generative side was the natural next step.

Discriminators define truth; generators break it. By submitting high-signal synthetic data, generators continuously refresh the benchmark and force incentives to evolve organically, outpacing any manual tuning.

2. Introducing GAS — the Generative Adversarial Subnet

GAS replaces remote inference with model submission.

Instead of hosting live endpoints, miners now package and submit discriminator models for evaluation. The evaluation pipeline uses a comprehensive, dynamic benchmark dataset that includes fresh adversarial media produced by generative miners.

Model submission and the generative side were introduced together by design. With both generators and discriminators operating on the subnet, requiring model submission prevents collusion between the two roles. A miner controlling both sides of live inference could otherwise track the media they produced and use that record to recognize it at inference time. Requiring that models be submitted isolates inference, ensuring that a miner’s solution is in fact a performant classifier and not a vector database lookup in disguise.

Core mechanics

- Discriminators are scored on accuracy against the full, auto-updating corpus at huggingface.co/gasstation.

- Generators earn rewards when their samples fool the current leaderboard.

- Submission isolates inference, so a miner cannot pair its own generator with a detector that “remembers” its outputs.

This shift also resolved several long-standing issues from the previous design and introduced new flexibility. GAS ends registration wars by tying standing to quality, not slots (generation’s heavy workloads further deter multi-axon abuse); rewards only unique, high-performing architectures by exposing weights for deduplication; cleanly separates evaluation from production APIs; delivers stable, comprehensive benchmarking free of live-traffic noise; slashes operating costs by eliminating 24/7 endpoints; and keeps enterprise traffic and latency within BitMind’s private infrastructure.
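As a rough illustration of how exposed weights make deduplication possible, the sketch below fingerprints a submission by hashing its parameters. The `weight_fingerprint` and `find_duplicates` helpers and the name-to-bytes layout are hypothetical, not the subnet's actual code. An exact hash only catches byte-identical copies; spotting lightly perturbed copies would need a similarity measure instead.

```python
import hashlib


def weight_fingerprint(weights: dict[str, bytes]) -> str:
    """Hash parameter names and raw bytes in a stable (sorted) order."""
    digest = hashlib.sha256()
    for name in sorted(weights):
        digest.update(name.encode("utf-8"))
        # Length prefix keeps adjacent tensors from blurring into each other.
        digest.update(len(weights[name]).to_bytes(8, "big"))
        digest.update(weights[name])
    return digest.hexdigest()


def find_duplicates(submissions: dict[str, dict[str, bytes]]) -> dict[str, str]:
    """Map each duplicate submission id to the earliest id with the same weights."""
    first_seen: dict[str, str] = {}
    duplicates: dict[str, str] = {}
    # Dict insertion order stands in for arrival order (an assumption).
    for submission_id, weights in submissions.items():
        fingerprint = weight_fingerprint(weights)
        if fingerprint in first_seen:
            duplicates[submission_id] = first_seen[fingerprint]
        else:
            first_seen[fingerprint] = submission_id
    return duplicates
```

Only the first arrival of a given set of weights would remain eligible for rewards under this scheme.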

GAS also changes how synthetic data enters the ecosystem.

Under GAS, generative miners contribute their outputs to a public corpus hosted at huggingface.co/gasstation. This collective repository ensures that all participants—and the broader research community—share access to the same, openly available data foundation. It levels the playing field, supports reproducibility, and turns individual mining effort into a shared contribution to the study of generative and synthetic media.

By restructuring how work is submitted, evaluated, and shared, GAS removes the overhead that once stood between miners and what they were already trying to do—advance their models.

3. The Adversarial Loop

Subnet 34 operates as a distributed adversarial system inspired by the original Generative Adversarial Network framework introduced by Goodfellow et al. (2014)—two models in competition, each improving through the other’s progress.

SN34 extends that idea to a network scale, distributing the discriminative and generative adversarial roles across independent participants.

All media produced by generative miners is hosted at huggingface.co/gasstation as continually expanding, publicly available datasets. Validators regularly refresh these datasets with verified contributions from generative miners; discriminators are then scored on how well they detect them. The loop renews itself continuously, surfacing new edge cases and driving both sides toward stronger representations.

This feedback loop also creates a new technical challenge: validating synthetic data quality.

Without reference ground truth, the subnet can’t directly measure whether a sample is “correct.” For now, validators perform prompt-alignment checks, comparing each sample’s semantic and visual features to its input prompt. Future work will extend this process with additional reference-less metrics, including:

- C2PA provenance and watermark verification, rewarding submissions from verifiably state-of-the-art or traceable generators.

- No-reference visual quality estimators such as CLIP-IQA, providing quantitative signals of realism and perceptual quality.

These mechanisms will make generative rewards more selective and increase the overall signal-to-noise ratio of the benchmark.
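The prompt-alignment check described above can be pictured as a similarity test between two embeddings. The sketch below assumes the prompt and the generated sample have already been embedded into a shared vector space by some text-image encoder; the encoder, the function names, and the 0.25 cutoff are all illustrative assumptions rather than the validators' actual settings.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def passes_prompt_alignment(
    prompt_embedding: list[float],
    sample_embedding: list[float],
    min_similarity: float = 0.25,  # hypothetical cutoff
) -> bool:
    """Accept a sample only if it is close enough to the prompt it was generated from."""
    return cosine_similarity(prompt_embedding, sample_embedding) >= min_similarity
```

A sample whose embedding is orthogonal to its prompt would be rejected, while one pointing in the same direction would pass.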

Because generator incentives are tied to producing data that actually fools discriminators, benchmark difficulty scales automatically. As discriminators improve, generators must evolve, and the competitive surface adjusts on its own.

Over time, this produces two converging effects:

- Discriminators that generalize better across unseen synthetic distributions.

- Generators that yield increasingly realistic, diverse samples, leveraging any available open-source models or third-party services. These samples are useful not only for evaluation, but as open, renewable training data for the broader field.

4. Evaluation and Incentives

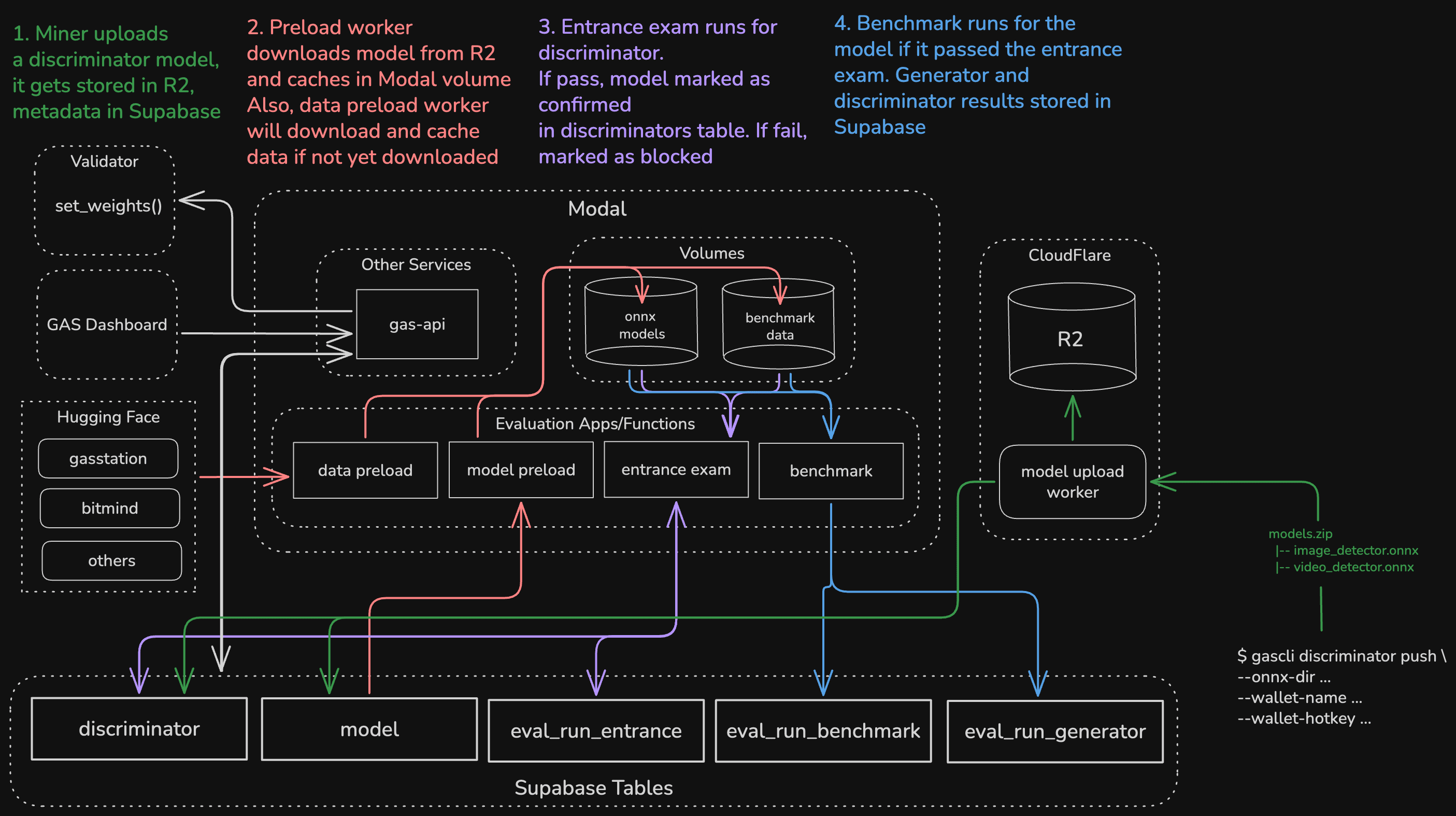

Upon submission, discriminator models are screened with an entrance exam using a subset of the available benchmark data. Models with performance below a predefined threshold are blocked from further evaluation, ensuring compute is reserved for competitive models.
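A minimal sketch of such a gate, assuming binary labels (1 = synthetic, 0 = real) and plain accuracy as the screening metric. The function names and the 0.8 cutoff are placeholders, since the actual threshold is not stated here.

```python
def accuracy(predictions: list[int], labels: list[int]) -> float:
    """Fraction of predictions that match the ground-truth labels."""
    if not labels or len(predictions) != len(labels):
        raise ValueError("predictions and labels must be non-empty and equal in length")
    correct = sum(p == y for p, y in zip(predictions, labels))
    return correct / len(labels)


def passes_entrance_exam(
    predictions: list[int],
    labels: list[int],
    min_accuracy: float = 0.8,  # hypothetical threshold
) -> bool:
    """Admit a model to the full benchmark only if it clears the screening subset."""
    return accuracy(predictions, labels) >= min_accuracy
```

Models that fail this check never reach the full benchmark, so the expensive evaluation only runs for submissions with a realistic chance of competing.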

Because evaluations run as batches rather than being interleaved with live traffic, results are consistent and statistically stable.

Generator evaluation relies on whether their submissions pass validation checks (e.g. prompt-alignment), and how often they fool top discriminators. The more convincingly a sample passes as real under current detectors, the higher its reward potential.

This approach turns generator progress directly into benchmark difficulty, which then feeds back into discriminator training (as the data are publicly available).
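One way to combine the two generator signals, validation and fooling, is a mean fool rate in which invalid samples count as zero. This is a sketch under that assumption; the `GeneratedSample` structure and the scoring rule are hypothetical and may differ from the subnet's actual reward function.

```python
from dataclasses import dataclass


@dataclass
class GeneratedSample:
    passed_validation: bool  # e.g. the outcome of a prompt-alignment check
    judged_real: list[bool]  # one verdict per top discriminator; True = fooled


def generator_score(samples: list[GeneratedSample]) -> float:
    """Mean fool rate over all submitted samples; invalid samples contribute 0."""
    if not samples:
        return 0.0
    total = 0.0
    for sample in samples:
        if sample.passed_validation and sample.judged_real:
            total += sum(sample.judged_real) / len(sample.judged_real)
    return total / len(samples)
```

Dividing by the total number of submissions, rather than only the valid ones, means flooding the validator with low-quality samples lowers the score instead of being free.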

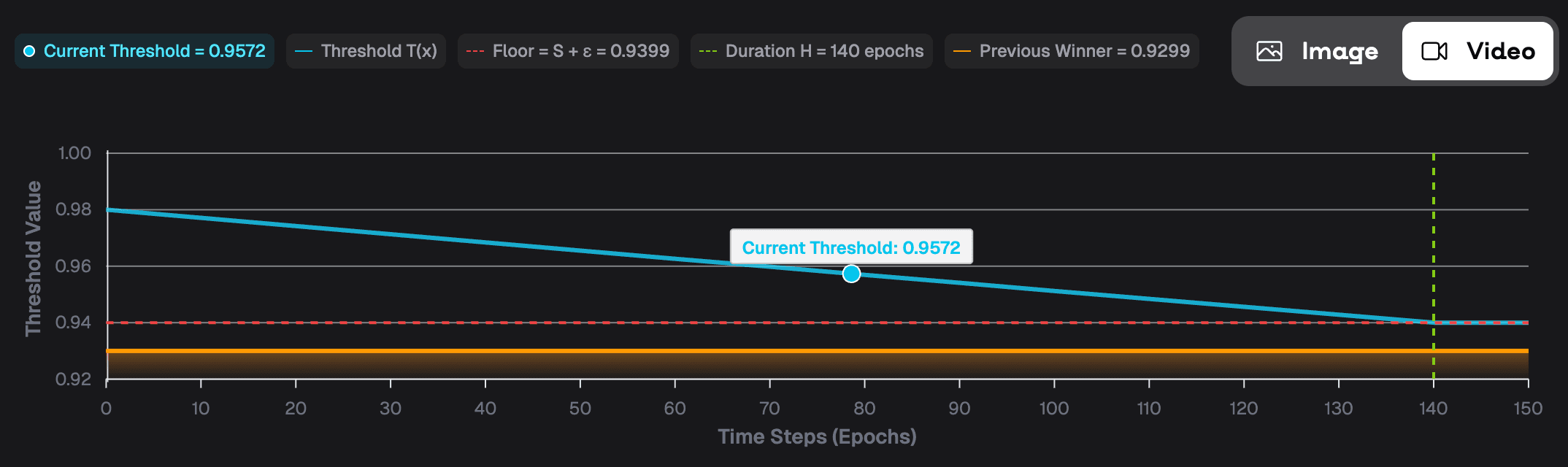

To translate these metrics into discriminator rewards, GAS uses a Winner-Takes-All (WTA) mechanism with a decaying threshold.

When a model surpasses the current best score S, a temporary boost is applied—raising the threshold to S + boost. That threshold then decays toward S + ε over time, ensuring that subsequent improvements are meaningful but not impossible.

The threshold is governed by the following parameters:

| Symbol | Meaning | Typical value / logic |
|---|---|---|
| S | New leader’s score (current winning performance) | e.g. 0.87 |
| ε | Floor margin | Keeps a minimum gap above S once the threshold decays, e.g. 0.01 ⇒ floor = S + 0.01 |
| Δ | Improvement amount, $S - S_{\text{prev}}$ | How much the new leader improved over the old one |
| boost | Initial jump applied to the threshold | $\min(\text{cap}, g \cdot \Delta)$ |
| g | Gain factor on improvement | e.g. 2.5 |
| cap | Maximum allowed boost | e.g. 0.05 |
| k | Decay rate constant | Chosen so that the threshold lands exactly on the floor after H epochs |
| H | Duration (epochs until full decay) | e.g. 140 epochs ≈ 1 week |
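One schedule consistent with the parameters in the table, offered as an assumption rather than as the subnet's exact definition, is an exponential decay that starts at S + boost and reaches the floor S + ε after exactly H epochs:

$$T(t) = \max\left(S + \varepsilon,\; S + \text{boost} \cdot e^{-kt}\right), \qquad k = \frac{\ln(\text{boost} / \varepsilon)}{H}$$

The Python sketch below implements that form. The function name, argument names, and the handling of boosts smaller than ε are illustrative choices.

```python
import math


def wta_threshold(
    t: float,             # epochs elapsed since the new leader took over
    s: float,             # new leader's score S
    s_prev: float,        # previous leader's score
    eps: float = 0.01,    # floor margin
    gain: float = 2.5,    # g
    cap: float = 0.05,    # maximum boost
    horizon: float = 140  # H, epochs until the threshold reaches the floor
) -> float:
    """Score a challenger must beat at epoch t (assumed exponential decay)."""
    delta = s - s_prev
    boost = min(cap, gain * delta)
    floor = s + eps
    if boost <= eps:
        # Small improvements never lift the threshold above the floor.
        return floor
    k = math.log(boost / eps) / horizon  # so that boost * exp(-k * H) == eps
    return max(floor, s + boost * math.exp(-k * t))
```

With S = 0.87, an assumed previous best of 0.86, and the example values from the table, the boost is min(0.05, 2.5 × 0.01) = 0.025, so the threshold starts at 0.895 and settles at 0.88 by epoch 140.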

Each modality maintains its own discriminator WTA track, allowing them to progress independently. Incentives on both sides remain aligned with the subnet’s digital commodity:

- Discriminators are rewarded for measurable accuracy gains on standardized data.

- Generators are rewarded when they successfully challenge the status quo by producing realistic, high-signal data.

Together, these two halves of the system form a closed loop.

5. Infrastructure and Enterprise Integration

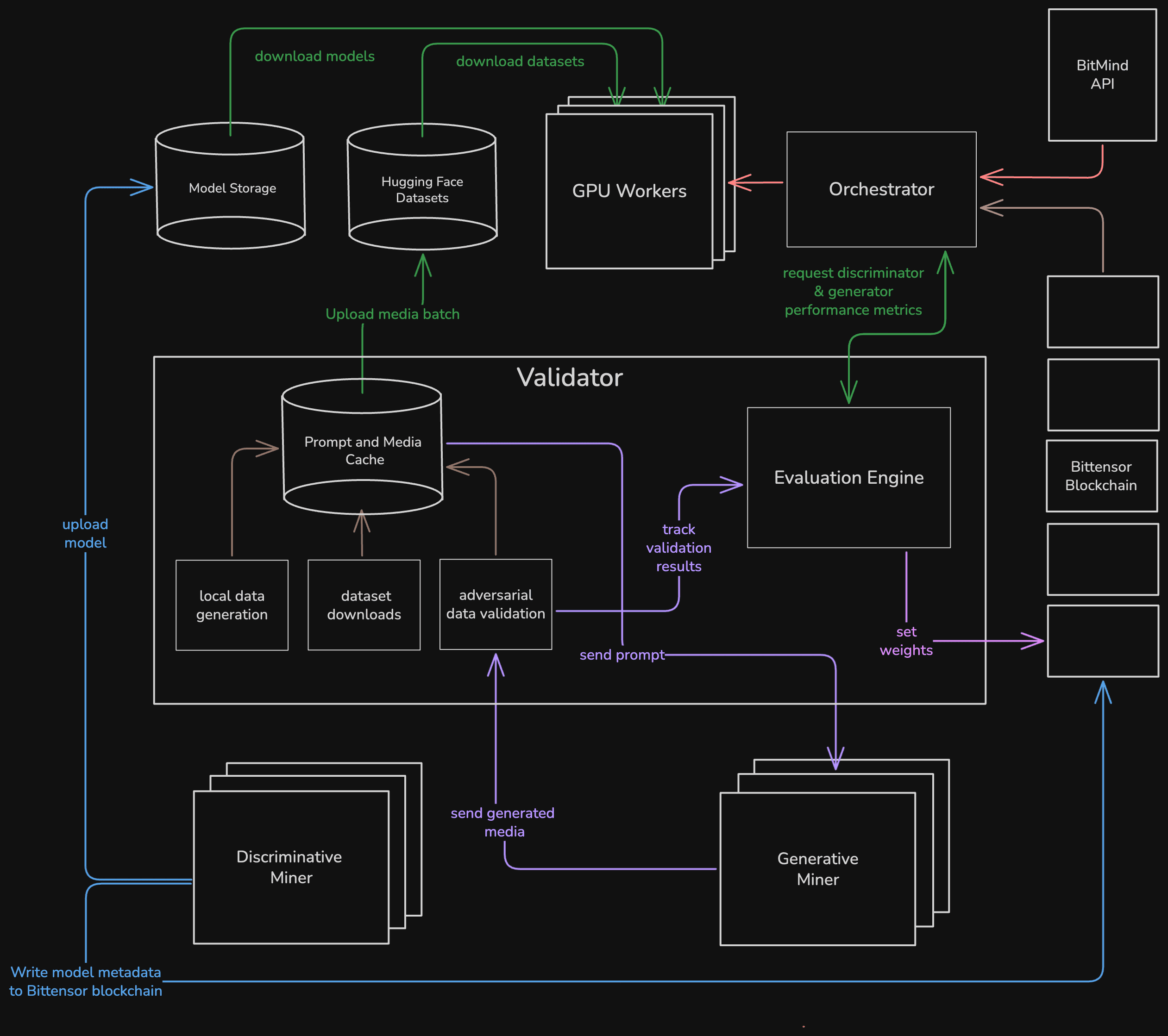

Evaluation orchestration

The model-submission architecture behind GAS necessitates a significant layer of orchestration and tracking.

Every model submitted to the subnet enters a managed evaluation pipeline that handles queueing, container isolation, and deterministic reproducibility.

A submission first passes a lightweight entrance exam designed to screen out non-functional or underperforming models before they consume full-benchmark compute. This job also collects profiling statistics—VRAM requirements, inference latency, and throughput—that inform downstream GPU scheduling.

Each discriminator evaluation then runs on a dedicated GPU worker node, provisioned automatically with hardware and memory specifications derived from those profiling results.

This ensures each benchmark executes under optimal conditions while keeping resource allocation predictable across submissions.
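As an illustration of profiling-driven provisioning, the sketch below picks the smallest GPU tier whose memory covers the peak VRAM measured during the entrance exam, plus a safety margin. The tier names, sizes, and 20% headroom are hypothetical; the real scheduler may weigh latency and throughput as well.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GpuTier:
    name: str
    vram_gb: float


# Hypothetical worker tiers, for illustration only.
TIERS = (
    GpuTier("small", 16),
    GpuTier("medium", 24),
    GpuTier("large", 48),
    GpuTier("xlarge", 80),
)


def select_tier(
    peak_vram_gb: float,
    headroom: float = 1.2,  # assumed 20% safety margin over the profiled peak
    tiers: tuple[GpuTier, ...] = TIERS,
) -> GpuTier:
    """Return the smallest tier that fits the profiled peak VRAM plus headroom."""
    required = peak_vram_gb * headroom
    for tier in sorted(tiers, key=lambda t: t.vram_gb):
        if tier.vram_gb >= required:
            return tier
    raise ValueError(f"no tier offers {required:.1f} GB of VRAM")
```

Choosing the smallest adequate tier keeps allocation predictable: two submissions with the same profile always land on the same class of hardware.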

Performance telemetry, including GPU utilization, runtime, and per-sample throughput, is continuously logged and versioned alongside benchmark results.

Together, these elements form a complete audit trail for every evaluation run.

Internally, the subnet uses the same stack that powers BitMind’s enterprise services:

- CUDA-enabled evaluation pods managed through container orchestration,

- Postgres for metadata and benchmark indexing,

- Cloudflare R2 for storing evaluation artifacts and logs,

- Hugging Face for raw image/video data storage.

For miners, this design removes the operational burden of maintaining uptime, infrastructure, or serving endpoints.

For subnet operators, it provides a reproducible evaluation fabric that scales with submission volume and enforces consistency across the entire network.

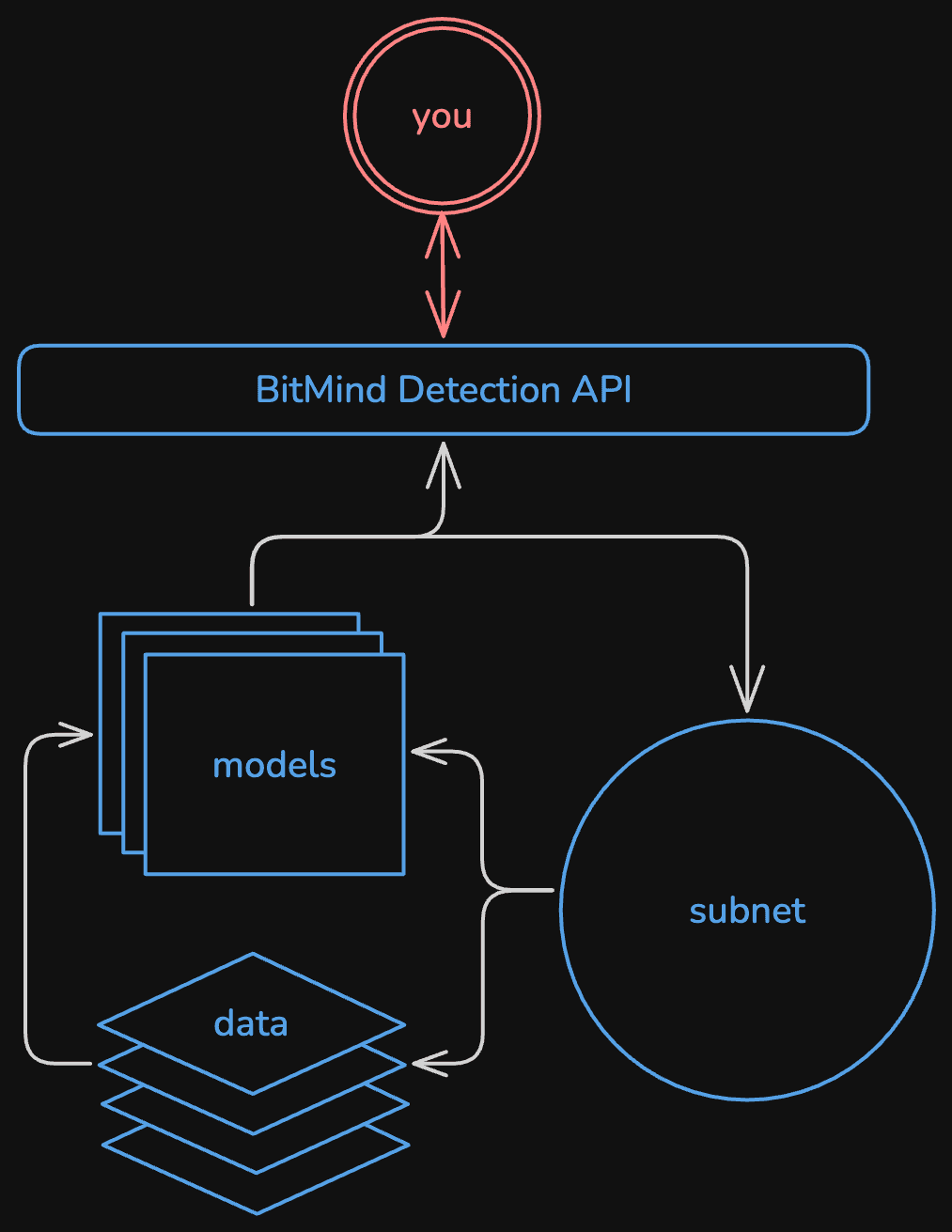

Enterprise Isolation and Compliance

Model submission also introduces a clean separation between evaluation and inference. With top-performing models deployed on off-chain BitMind infrastructure, API traffic never touches subnet nodes, preventing customer data from reaching untrusted environments.

This isolation is what makes enterprise use of SN34 models viable.

Discriminative mining remains fully decentralized in participation, but centrally governed in model and data handling. Every model submission, benchmark, and artifact is versioned and traceable through its hash, benchmark version, and timestamp. This provides reproducibility, auditability, and verifiable lineage for all subnet outcomes.
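A traceable record of this kind can be as simple as a content hash plus the benchmark version and a timestamp. The sketch below assumes SHA-256 and a flat JSON-friendly dictionary; the field names and the `evaluation_record` helper are hypothetical, not BitMind's actual schema.

```python
import hashlib
from datetime import datetime, timezone
from typing import Optional


def evaluation_record(
    model_bytes: bytes,
    benchmark_version: str,
    score: float,
    timestamp: Optional[datetime] = None,
) -> dict:
    """Build an audit entry that ties a score to exact model bytes and benchmark version."""
    when = timestamp or datetime.now(timezone.utc)
    return {
        "model_sha256": hashlib.sha256(model_bytes).hexdigest(),
        "benchmark_version": benchmark_version,
        "timestamp": when.isoformat(),
        "score": score,
    }
```

Because the hash is computed from the submitted bytes, anyone holding the same model file and benchmark version can confirm which artifact a published score refers to.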

GAS therefore bridges decentralized model competition with enterprise-grade data controls: open innovation on one side, strict privacy guarantees on the other.

6. Positioning in the deepfake-detection market

Deepfake detection today splits along two paths:

- Closed commercial APIs: stable but opaque, with limited visibility into how models evolve.

- Academic research models: transparent but impractical for production, often frozen after publication.

Subnet 34’s Generative Adversarial Subnet sits between these extremes. It maintains open competition and evolving benchmarks, but the validated models themselves can be privately hosted within BitMind’s infrastructure or a customer’s secure environment. The connection between the subnet and enterprise services becomes seamless:

- Predictable performance. Enterprises use the same models that topped subnet leaderboards, but under fixed, version-pinned conditions.

- Controlled upgrade paths. New benchmark winners can be adopted automatically or only after internal evaluation, depending on the client’s governance policies.

- No operational overhead. BitMind manages GPUs, orchestration, and updates; customers consume the models as a service.

This structure turns SN34 from a public competition into an R&D pipeline that feeds directly into production-ready detectors.

The subnet continues to generate and validate models openly, while enterprise partners benefit from that progress through secure, private deployments rather than API calls that interact with subnet nodes.

In this way, SN34 bridges open innovation and operational reliability—the subnet produces the intelligence, while private hosting delivers it safely to where it’s needed.

7. Conclusion

Subnet 34 has transformed from a distributed inference network into GAS—a self-sustaining Generative Adversarial Engine that co-evolves detectors, generators, benchmarks, and data in a single, incentive-aligned loop.

- Generative miners push the frontier of synthetic realism, publishing every breakthrough to the open corpus at huggingface.co/gasstation.

- Discriminator miners adapt instantly, submitting isolated models that are batch-evaluated on the hardest, freshest adversarial data.

- Validators orchestrate reproducible, versioned runs; winner-takes-all rewards with decaying thresholds keep progress incremental yet relentless.

- BitMind takes the validated leaders off-chain, hosting them privately for enterprise partners under strict isolation, auditability, and upgrade governance.

The result is a continuous learning system where no benchmark ever expires, no dataset ever silos, and no enterprise ever exposes sensitive media to the open network. Research feeds deployment; competition fuels reliability; decentralization powers trust.

Call to Action

Miners

Submit your first discriminator or generator today at app.bitmind.ai. Top the leaderboard, earn rewards, and see your model harden the global standard for computer vision detection.

Enterprises

Book a 15-minute call to learn about our enterprise services and see exactly how we can protect your business: bitmind.ai/product/custom-solutions

8. What’s next

The next phase of SN34 development focuses on extending the subnet’s reach from benchmarking into deployment—making it easier for enterprises to adopt, audit, and operate models derived from the network.

Several priorities are already in motion:

- Tiered private hosting

  Enterprises will be able to host validated models in three modes:

  - Fully managed: models served from BitMind infrastructure with dedicated compute.

  - On-prem: models served from an organization's own infrastructure, under its own operations and security controls.

  - Offline: downloadable, signed model bundles with expiration and provenance metadata.

- Advanced data-quality metrics for generator incentives

  Validators will incorporate C2PA provenance checks and no-reference metrics such as CLIP-IQA to refine generator rewards and further improve benchmark signal.

The long-term goal is simple: turn SN34 into the default engine for trustworthy AIGC detection—an open network that continuously produces verifiable models and delivers them securely through private, production-ready infrastructure.